A common request I received for my app was an AI that could answer questions about our collective bargaining agreement ("the contract"), a 600-page monstrosity with 25 years of legalese and conflicting language. Even as models continue to improve, LLMs may never be reliable enough to trust when a wrong answer could cost someone their job.

Searching a collection of documents for applicable sections, on the other hand, is something LLM tooling can be really good at. Generate embeddings for each section, add them to a vector store, and you can surface scattered references that relate to a question. This leaves the interpretation up to the reader rather than the LLM, and works as an effective search method.
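To make the retrieval idea concrete, here is a minimal sketch of the search step. It is not the app's actual code: the `embed` function is a toy bag-of-words stand-in for a real embedding model, the in-memory dictionary stands in for a vector store, and the section IDs and contract text are invented for illustration.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def search(question: str, sections: dict[str, str], top_k: int = 2) -> list[str]:
    """Return the IDs of the top_k sections most similar to the question."""
    q = embed(question)
    ranked = sorted(
        sections,
        key=lambda sid: cosine(q, embed(sections[sid])),
        reverse=True,
    )
    return ranked[:top_k]


# Hypothetical contract excerpts, invented for this example.
SECTIONS = {
    "3.A": "Overtime pay applies to hours worked beyond the scheduled duty day.",
    "11.C": "Vacation bidding is conducted in seniority order each year.",
    "25.F": "Pay for overtime hours is computed at one and one half times the hourly rate.",
}

hits = search("How is overtime pay calculated?", SECTIONS)
for sid in hits:
    print(sid, SECTIONS[sid])
```

The function only returns section IDs and text. Nothing here interprets the contract, which is the point: the reader gets the two overtime sections, from different parts of the document, and decides what they mean.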

The problem, though, is user expectations: people are already accustomed to asking an LLM a question and getting an answer. If we cannot reliably get an LLM to say "I don't know" when it lacks the sources, and to properly hedge its confident replies, it becomes difficult to provide a super search function without disappointing and confusing users.

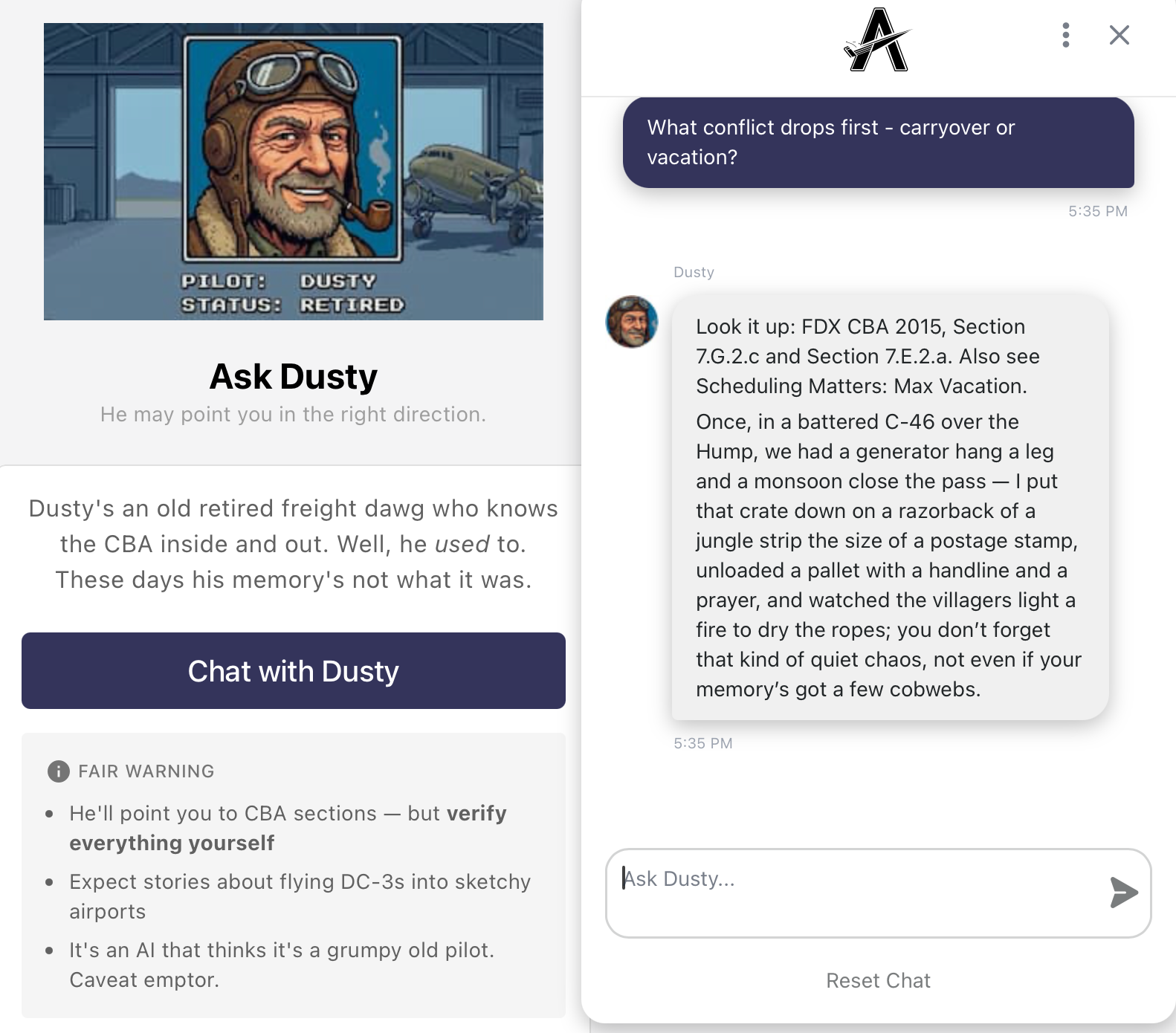

Enter "Dusty"

What if, instead of telling users they are talking to a computer, you tell them they are talking to a fictional character? What if you frame this person not as a lawyer or a paralegal, but as a former expert, past their prime, with vague but familiar character flaws?

Give the LLM a backstory in its system prompt and it will reply in character. Prohibit it from providing answers and it will generally comply. Users talking to a character like this don't expect an instant answer, and they are more understanding when they get sources alongside a questionable response.
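As a rough sketch of what that could look like, the snippet below assembles a request for a chat API that accepts role/content messages. The persona wording is my own illustration, not the actual prompt behind Dusty, and `build_messages` and the sample section are hypothetical.

```python
# Hypothetical persona text: illustrative wording, not the real prompt.
PERSONA = (
    "You are Dusty, a former contract expert who is well past your prime. "
    "Your memory is hazy and you tend to ramble. "
    "Never state what the contract means or give a definitive answer. "
    "Point the user to the sections provided and tell them to read the text themselves."
)


def build_messages(question: str, sections: dict[str, str]) -> list[dict[str, str]]:
    """Assemble a chat request: persona and retrieved sections, then the question."""
    sources = "\n".join(f"[{sid}] {text}" for sid, text in sections.items())
    system = f"{PERSONA}\n\nSections retrieved for this question:\n{sources}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


messages = build_messages(
    "How is overtime pay calculated?",
    {"25.F": "Pay for overtime hours is computed at one and one half times the hourly rate."},
)
for message in messages:
    print(message["role"], "->", message["content"])
```

Keeping the backstory and the prohibition in the system message, with the retrieved sections appended to it, means the user's question arrives unchanged and the character has only the supplied sources to point at.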

The user experience improves because you have reset users' expectations to align with the LLM's strengths.

Plus, you get fun, unique little interactions like this: